Understanding AI Agents: From Concept to Implementation

Oct 22, 2024 by Sabber ahamed

In my last blog post, "LLM based Multi-agent Systems: The Future of AI Collaboration", I discussed how multi-agent systems work. In this post, I guide you through understanding and creating AI assistants, from fundamental concepts to practical implementation.

Table of Contents

- What is an AI Assistant?

- Core Components

- Architecture Overview

- Implementation Steps

- Best Practices

- Future Considerations

What is an AI Assistant?

An AI agent is a software system designed to interact with users in a natural, conversational manner while performing one or more specific tasks. It usually has a large language model (LLM) at its core, which lets the agent understand user input, process information, and generate appropriate responses.

An AI agent should have the following characteristics:

- Natural Language Understanding (NLU)

- Context awareness

- Domain expertise

- Adaptive learning capabilities

- Multi-modal interaction support

- Memory of past interactions

- Access to outside information

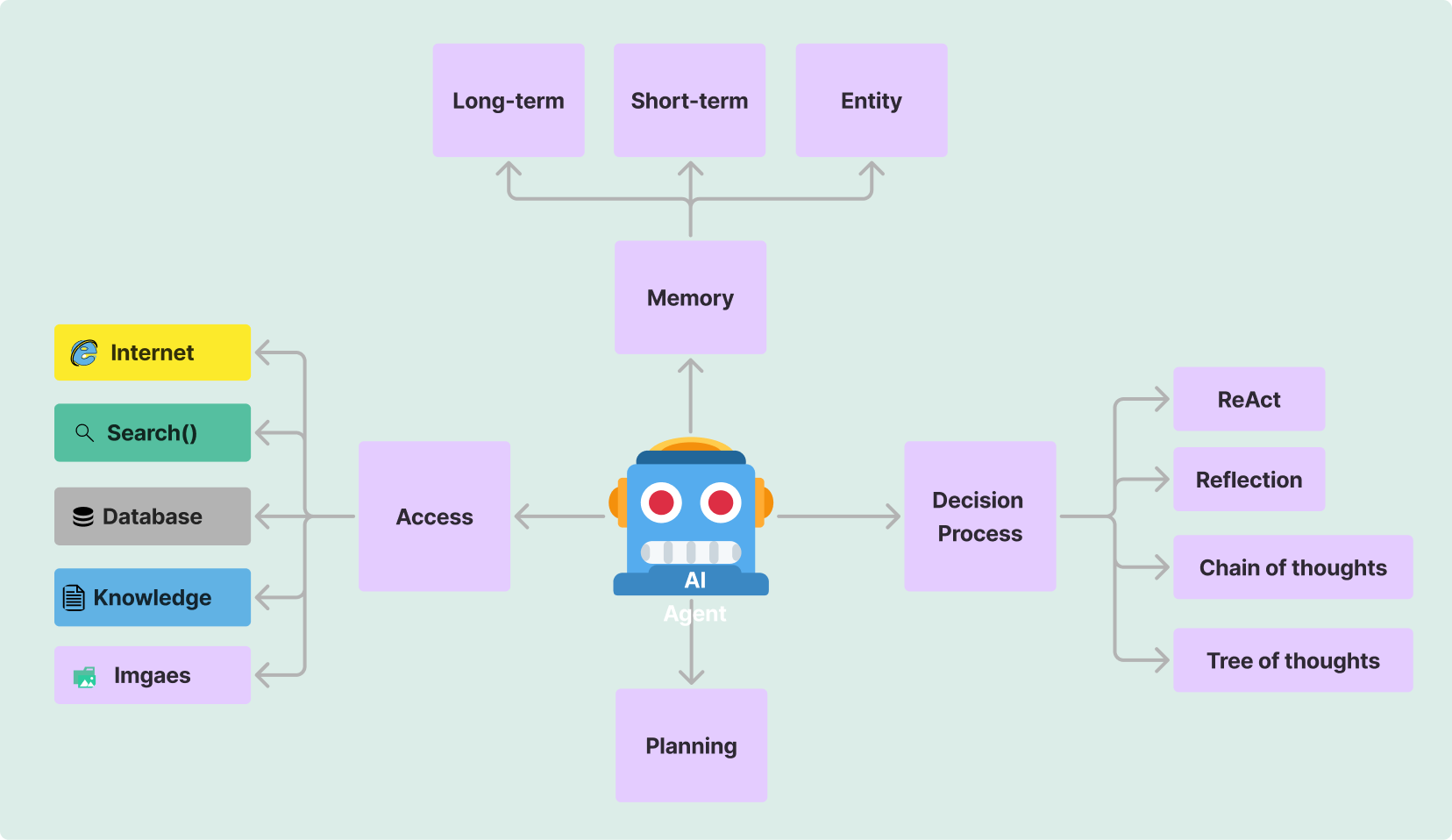

Core Components

Modern AI assistants consist of several essential components working together seamlessly:

1. Natural Language Understanding (NLU)

The NLU agent processes raw user input and extracts meaning. We can write a function that takes the user input, tokenizes it, and passes the tokens to the intent classifier and the entity extractor. Here's an example using Python:

def process_user_input(text):
    # Tokenize the input
    tokens = tokenize(text)

    # Extract intent and entities
    # (tokenize, classify_intent, and extract_entities are helper
    # functions that you supply)
    intent = classify_intent(tokens)
    entities = extract_entities(tokens)

    return {
        'intent': intent,
        'entities': entities,
        'original_text': text
    }

2. Intent Classification

This agent acts as a guide for the main agent: it determines what the user wants to accomplish and steers the main agent accordingly. Common intents might include:

- Information retrieval

- Task execution

- Clarification requests

- Small talk

- Whether the user is asking a question

- Whether the user is answering a previous question
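As a rough illustration of the classification step, the sketch below fills in the `tokenize`, `classify_intent`, and `extract_entities` helpers with simple keyword rules. The intent labels, keyword sets, and entity types are assumptions made for this example; a production system would more likely use a trained classifier or an LLM call here.

```python
import re

# Hypothetical keyword sets; tune them or replace them with a trained model.
INTENT_KEYWORDS = {
    "information_request": {"what", "who", "when", "where", "why", "how"},
    "task_execution": {"book", "send", "create", "schedule", "remind"},
    "small_talk": {"hello", "hi", "thanks", "bye"},
}

WEEKDAYS = {"monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def classify_intent(tokens):
    """Return the intent whose keyword set overlaps most with the tokens."""
    scores = {
        intent: len(keywords.intersection(tokens))
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best_intent = max(scores, key=scores.get)
    # Fall back to a clarification request when no keyword matches.
    return best_intent if scores[best_intent] > 0 else "clarification_request"


def extract_entities(tokens):
    """Pick out two very simple entity types: numbers and weekday names."""
    return {
        "numbers": [t for t in tokens if t.isdigit()],
        "days": [t for t in tokens if t in WEEKDAYS],
    }


tokens = tokenize("Schedule a meeting on Friday at 3")
print(classify_intent(tokens), extract_entities(tokens))
```

Keyword matching is brittle, but it makes the contract clear: tokens go in, and a single intent label plus a dictionary of entities come out. Any smarter classifier can be swapped in behind the same function names.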

3. Task Processing

This agent executes the required actions based on the user's intent. Depending on that intent, the core agent can call available tools or functions, or query external knowledge for more information.

class TaskProcessor:
    def __init__(self, knowledge_base, context_manager):
        self.knowledge_base = knowledge_base
        self.context_manager = context_manager

    def execute_task(self, intent, entities):
        if intent == "information_request":
            return self.knowledge_base.search(entities)
        elif intent == "task_execution":
            # perform_action is left for you to implement for your own tools
            return self.perform_action(entities)
        # Handle other intent types

4. Retrieving Past History

The memory agent plays a key role in how well the core agent performs. For a seamless conversation, the core agent needs to know past events, dates, and other key information. Based on the user input and intent, the memory agent supplies the relevant history so that the core agent can respond appropriately. I will explain the role of memory in more detail in my next post.
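To make the memory agent's role concrete, here is a minimal sketch of a store that records past turns and returns the ones sharing the most words with the current input. The `MemoryStore` class, its method names, and the word-overlap scoring are assumptions for illustration; real systems often use embeddings and a vector index instead.

```python
import re
from dataclasses import dataclass, field


def _words(text):
    """Lowercased set of word tokens used for overlap scoring."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


@dataclass
class MemoryStore:
    """Hypothetical in-memory log of past conversation turns."""
    turns: list = field(default_factory=list)

    def remember(self, user_input, system_response):
        self.turns.append({"user": user_input, "system": system_response})

    def recall(self, query, top_k=2):
        """Return up to top_k past turns that share words with the query."""
        query_words = _words(query)
        scored = []
        for turn in self.turns:
            overlap = len(query_words & _words(turn["user"]))
            if overlap > 0:
                scored.append((overlap, turn))
        # Highest overlap first; list.sort is stable, so ties keep their order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [turn for _, turn in scored[:top_k]]


memory = MemoryStore()
memory.remember("My flight to Boston is on May 3", "Noted.")
memory.remember("I prefer window seats", "Got it.")
relevant = memory.recall("When is my flight?")
```

Here `relevant` contains only the turn about the flight, which the core agent could then place in its prompt. Plain word overlap also counts common words such as "is" and "my", so a stop-word filter would be a sensible first refinement.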

Implementation Steps

The following code snippets show a high-level implementation that can be extended into a multi-agent system. You do not need a framework; a few classes are enough to organize your code.
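As a rough picture of how these pieces fit together without a framework, the sketch below wires three small steps (classify, execute, respond) into one class. All names here (`MiniAssistant`, the `FACTS` dictionary, the `TEMPLATES` dictionary) are hypothetical and only stand in for the components built in the steps of this section.

```python
# Hypothetical data standing in for a real knowledge base.
FACTS = {"llm": "A large language model predicts text from a prompt."}

# Hypothetical response templates, one per intent.
TEMPLATES = {
    "information_request": "Here is what I found: {result}",
    "unknown": "Could you rephrase that?",
}


class MiniAssistant:
    """Toy pipeline: classify the input, run a task, format a reply."""

    def __init__(self, facts, templates):
        self.facts = facts
        self.templates = templates
        self.history = []

    def classify(self, text):
        # Treat any input that mentions a known topic as an information request.
        for topic in self.facts:
            if topic in text.lower():
                return "information_request", topic
        return "unknown", None

    def execute(self, intent, topic):
        if intent == "information_request":
            return self.facts[topic]
        return None

    def respond(self, text):
        intent, topic = self.classify(text)
        result = self.execute(intent, topic)
        # Templates without a {result} placeholder simply ignore the argument.
        reply = self.templates[intent].format(result=result)
        self.history.append({"user": text, "system": reply})
        return reply


assistant = MiniAssistant(FACTS, TEMPLATES)
print(assistant.respond("What is an LLM?"))
```

Each method maps to one component described earlier, so any of them can be replaced independently, for example by swapping the substring check in `classify` for a model-based classifier.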

- Setting Up the Foundation

from transformers import AutoTokenizer, AutoModelForSequenceClassification

class AIAssistant:
    def __init__(self):
        self.tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")
        # The classification head is untrained until you fine-tune it
        # on your own intent data
        self.model = AutoModelForSequenceClassification.from_pretrained(
            "bert-base-uncased"
        )
        # ContextManager and KnowledgeBase must be defined before this runs
        self.context_manager = ContextManager()
        self.knowledge_base = KnowledgeBase()

- Implementing Context Management

class ContextManager:
    def __init__(self):
        self.conversation_history = []
        self.current_context = {}

    def update_context(self, user_input, system_response):
        self.conversation_history.append({
            'user': user_input,
            'system': system_response
        })
        # Update current context based on the interaction

- Creating the Response Generator

def generate_response(self, intent, entities, context):
    template = self.get_response_template(intent)
    filled_response = self.fill_template(template, entities)
    contextualized_response = self.apply_context(
        filled_response,
        context
    )
    return contextualized_response

Best Practices

Context Management

- Maintain conversation history

- Track user preferences

- Handle context switches gracefully
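The three practices above can be sketched in a small class. This is an illustrative variant, not the `ContextManager` from the implementation steps: the `max_turns` limit, the `topic` field, and the method names are assumptions chosen for this example.

```python
from collections import deque


class BoundedContext:
    """Hypothetical context holder with capped history and preferences."""

    def __init__(self, max_turns=20):
        # A deque with maxlen drops the oldest turn automatically.
        self.history = deque(maxlen=max_turns)
        self.preferences = {}
        self.topic = None

    def add_turn(self, user_input, system_response, topic=None):
        """Record a turn and return True if the topic switched."""
        switched = (
            topic is not None
            and self.topic is not None
            and topic != self.topic
        )
        if topic is not None:
            self.topic = topic
        self.history.append(
            {"user": user_input, "system": system_response, "topic": self.topic}
        )
        return switched

    def set_preference(self, key, value):
        self.preferences[key] = value


ctx = BoundedContext(max_turns=2)
ctx.set_preference("language", "English")
ctx.add_turn("Book a flight", "Where to?", topic="travel")
ctx.add_turn("To Boston", "Done.", topic="travel")
switched = ctx.add_turn("What's the weather?", "Sunny.", topic="weather")
```

With `max_turns=2`, the first turn has already been dropped by the time the third is added, and `switched` reports the move from travel to weather so the caller can decide how to react, for example by confirming the new topic with the user.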

Error Handling

import logging

def safe_process_input(self, user_input):
    try:
        result = self.process_user_input(user_input)
        return result
    except Exception as e:
        logging.error(f"Error processing input: {e}")
        return {
            'error': True,
            'message': "I apologize, but I couldn't process that properly."
        }

Performance Optimization

- Implement caching for frequent queries

- Use batch processing where applicable

- Optimize model inference

Future Considerations

As AI agents continue to evolve, several key areas will shape their development:

Emerging Trends

Multi-modal Interactions

- Voice + Text + Visual processing

- Gesture recognition

- Emotional intelligence

Advanced Learning Capabilities

- Continuous learning from interactions

- Personalization based on user behavior

- Adaptation to new domains

Enhanced Tool Usage

- Integration with external APIs

- Autonomous decision-making

- Complex task automation
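Tool integration of this kind is often organized around a registry that maps tool names to callables, so that an agent can select a tool by name at run time. The sketch below shows one way to do that; the `ToolRegistry` class and the two example tools are hypothetical, and a real external API call would replace the local functions.

```python
class ToolRegistry:
    """Hypothetical registry mapping tool names to Python callables."""

    def __init__(self):
        self._tools = {}

    def register(self, name):
        """Decorator that registers a function under the given name."""
        def decorator(func):
            self._tools[name] = func
            return func
        return decorator

    def call(self, name, **kwargs):
        if name not in self._tools:
            raise KeyError(f"Unknown tool: {name}")
        return self._tools[name](**kwargs)


tools = ToolRegistry()


@tools.register("add")
def add(a, b):
    return a + b


@tools.register("word_count")
def word_count(text):
    return len(text.split())


result = tools.call("add", a=2, b=3)
```

Because tools are looked up by name, the agent's decision step only has to produce a tool name and a dictionary of arguments, which keeps the decision logic separate from the tool implementations.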

Create Your Own Multi-agent System

We created getassisted.ai for building multi-agent systems seamlessly, with no code required. The goal is to create assistants that help you learn any niche topic. Whether you're a researcher, developer, or student, our platform offers a powerful environment for exploring the possibilities of multi-agent systems. Here is the link to explore some of the assistants created by our users.

Conclusion

Building an AI assistant requires careful consideration of various components and their interactions. By following the structured approach outlined in this article and implementing best practices, you can create robust and effective AI assistants that provide value to users.

Remember that the field of AI assistants is rapidly evolving, and staying updated with the latest developments and technologies is crucial for creating state-of-the-art systems.